AGI is Being Achieved Incrementally (OpenAI DevDay w/ Simon Willison, Alex Volkov, Jim Fan, Raza Habib, Shreya Rajpal, Rahul Ligma, et al)

Generative AI VIII: AGI Dangers and Perspectives - Synthesis AI

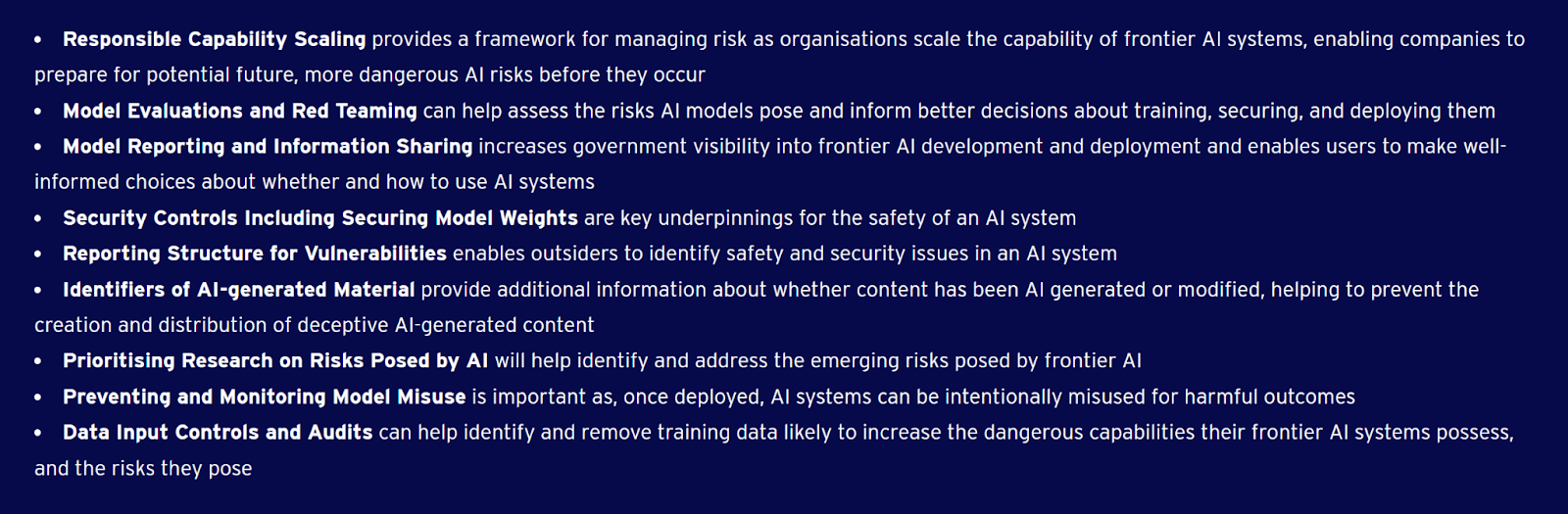

Thoughts on the AI Safety Summit company policy requests and responses - Machine Intelligence Research Institute

What is artificial general intelligence? Why is there no such thing yet? What are the main obstacles to achieve this goal? - Quora

Safety timelines: How long will it take to solve alignment? — LessWrong

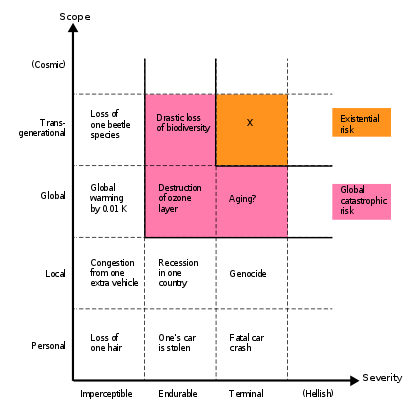

Existential risk from artificial general intelligence - Wikipedia

OpenAI's Superalignment Initiative: A key step-up in AI Alignment Research

Will AGI Systems Undermine Human Control? OpenAI, UC Berkeley & Oxford U Explore the Alignment Problem

The Alignment Problem: Machine Learning and Human Values by Brian Christian

AGI safety — discourse clarification, by Jan Matusiewicz

What is 'AI alignment'? Silicon Valley's favorite way to think about AI safety misses the real issues

Some AI research areas and their relevance to existential safety — AI Alignment Forum